Research Areas

Introduction

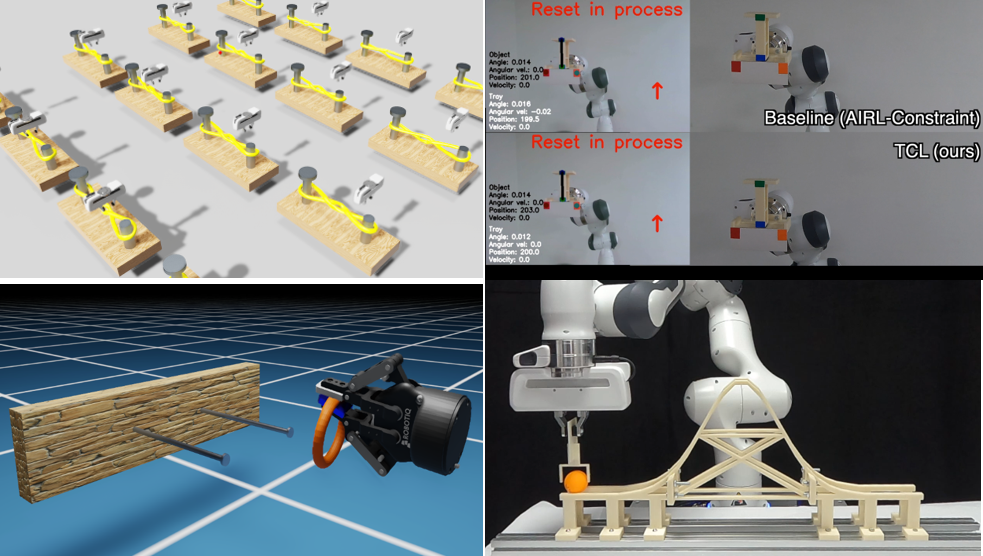

Generalist Robots in the Wild: Foundation Models for Real-World Tasks

We aim to develop fundamental learning methodologies for whole-body manipulation with humanoid robots, using both a legged humanoid platform (e.g., Unitree G1) and a mobile humanoid platform (TBD). Whole-body manipulation has recently emerged as a key frontier in robotics. To lead advancements in this domain, the RIRO Lab focuses on two core directions: (1) developing novel policy learning methods based on imitation and reinforcement learning, and (2) designing high-level planning strategies that leverage large language models (LLMs) and other foundation models such as vision-language-action (VLA) models. Our long-term goal is to build hierarchical foundation models capable of generalizing across complex, real-world tasks. The proposed methods will be rigorously evaluated on our robotic platforms and through collaborative efforts with industry partners.

Keywords: Imitation learning, State-space models (SSM), Diffusion policy, Constraint learning, Humanoid navigation

Selected paper: [under review]

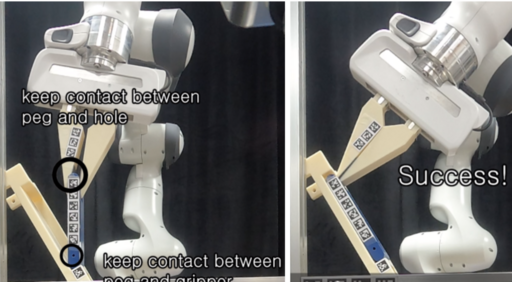

Interactive Learning Toward In-hand Manipulation of Deformable Objects

In-hand manipulation of deformable objects offers unprecedented opportunities to resolve various real-world problems, such as binding and taping. This project aims to develop a visuotactile in-hand manipulation method that repositions and reorients deformable objects in the hand as desired. Toward this line of research, we propose three research thrusts: 1) a physics-informed reinforcement learning (RL) framework, 2) an interactive RL framework, and 3) a Sim2Real transfer learning method.

Keywords: (Inverse) Reinforcement learning, Deformable object manipulation, Sim2Real transfer learning

Selected papers: [CoRL19], [RA-L23]

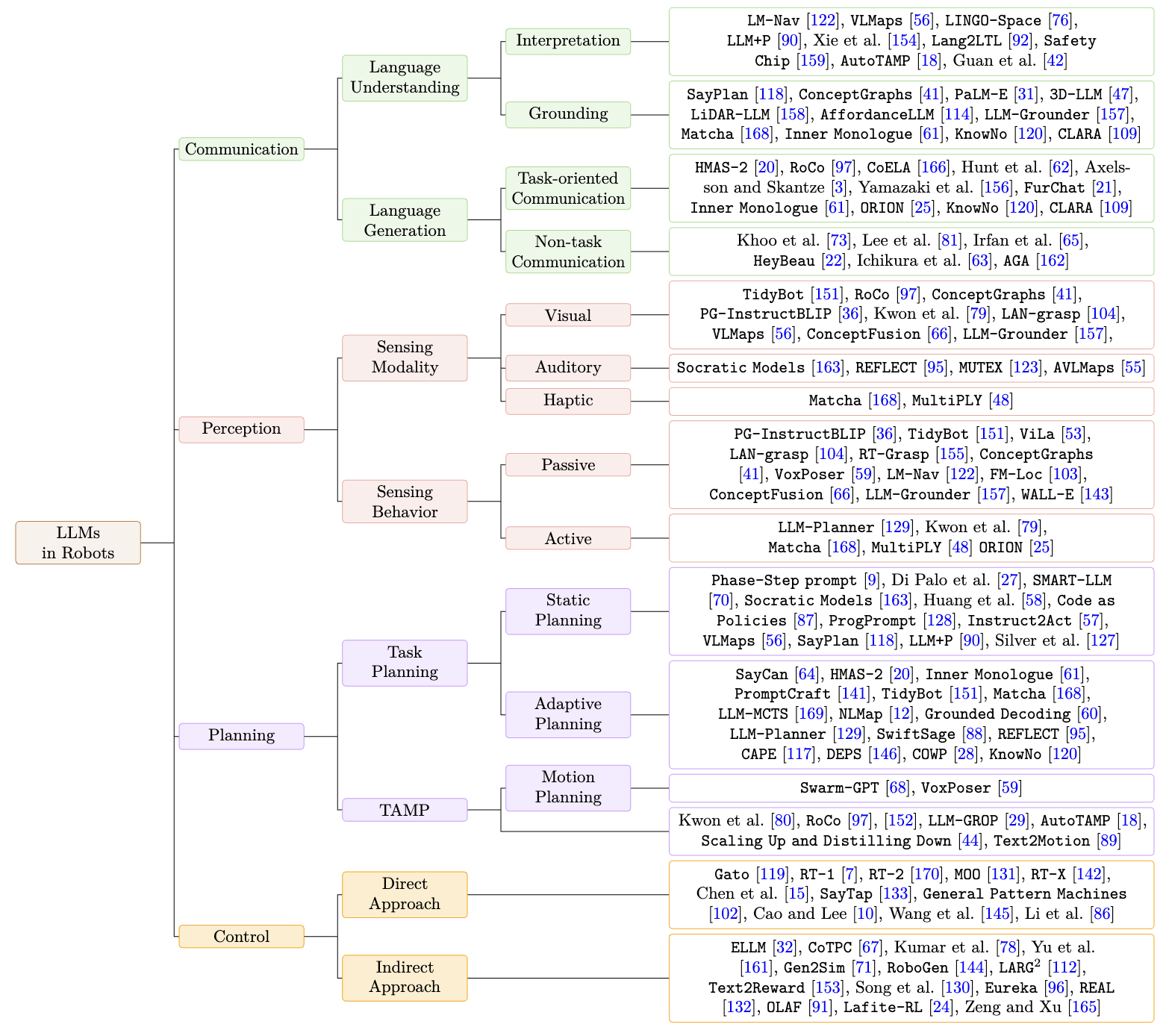

LLM/VLM-based Task-and-Motion Planning

We aim to introduce a task-and-motion planning (TAMP) framework that solves complex, long-horizon human tasks. To address completeness, optimality, and robustness issues, we are working on various task planning and motion planning approaches. We will demonstrate a generalizable TAMP framework that operates under a human operator's cooperative or adversarial interventions.

Keywords: Large language models, Large multimodal models, Semantic perception, Behavior tree

Selected papers: [ICRA21], [RA-L22], [ICRA24]

Language-Guided Navigation & Manipulation

Natural language is one of the most intuitive and accessible ways for humans to convey intent, without the need for specialized tools or training. This research aims to ground natural language instructions in real-world robotic tasks involving navigation and manipulation. While conventional natural language grounding (NLG) methods often focus on mapping language to sparse goal specifications, our approach explores richer forms of grounding (spatial reasoning, action understanding, and plan generation) by leveraging common-sense knowledge and motion demonstrations. A key challenge in this domain is that human instructions are inherently ambiguous and rely on implicit assumptions. To address this, we develop frameworks that learn common-sense priors from language-motion pairs, enabling robots to interpret under-specified instructions in a context-aware manner. Our methods are evaluated on diverse mobile platforms, including quadrupedal robots such as Boston Dynamics Spot, to demonstrate the effectiveness of language-guided policy learning in both structured and unstructured environments.

Keywords: Quadruped robot, Semantic SLAM, Natural language grounding, Space grounding

Selected papers: [IJRR20], [FR22], [AAAI24]